Testing the model API

After you deploy the model, you can test its API endpoints.

Procedure

-

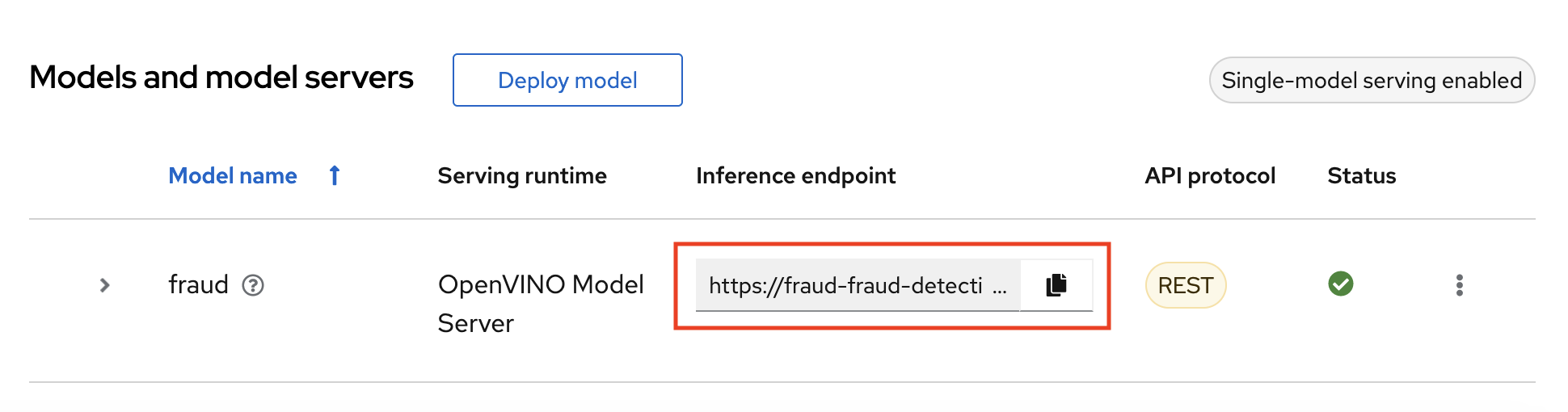

In the OpenShift AI dashboard, navigate to the project details page and click the Deployments tab.

- Take note of the model’s Inference endpoint URL. You need this information when you test the model API.

  If the Inference endpoint field has an Internal endpoint details link, click the link to open a text box that shows the URL details, and then take note of the restUrl value.

  NOTE: When you test the model API from inside a workbench, you must edit the endpoint to specify 8888 for the port.

- Return to the JupyterLab environment and try out your new endpoint.

  Follow the directions in 3_rest_requests.ipynb to try a REST API call.
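For orientation, the steps above can be sketched in Python. This is a minimal sketch, not the contents of 3_rest_requests.ipynb: it assumes the model server accepts KServe v2 (Open Inference Protocol) REST requests, and the endpoint URL, model name, input tensor name, and feature values are all hypothetical placeholders. Replace them with the restUrl value you noted and the values given in the notebook.

```python
import json
import urllib.request
from urllib.parse import urlsplit, urlunsplit


def with_port(url: str, port: int = 8888) -> str:
    """Return the URL with its port set to `port` (added if none was present)."""
    parts = urlsplit(url)
    host = parts.hostname or ""
    return urlunsplit(parts._replace(netloc=f"{host}:{port}"))


def build_infer_request(base_url: str, model_name: str, input_name: str, values: list):
    """Build the URL and JSON body for a KServe v2 style inference request.

    Assumption: the model takes a single FP32 input tensor of shape [1, N].
    """
    url = f"{base_url.rstrip('/')}/v2/models/{model_name}/infer"
    payload = {
        "inputs": [
            {
                "name": input_name,
                "shape": [1, len(values)],
                "datatype": "FP32",
                "data": list(values),
            }
        ]
    }
    return url, payload


def infer(url: str, payload: dict, timeout: float = 10.0) -> dict:
    """POST the payload as JSON and return the decoded JSON response."""
    request = urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.load(response)


if __name__ == "__main__":
    # Hypothetical values: substitute the restUrl you noted and the model
    # name, input name, and feature values that the notebook specifies.
    rest_url = "http://example-model.example-project.svc.cluster.local"
    endpoint = with_port(rest_url, 8888)  # required when calling from a workbench
    url, payload = build_infer_request(
        endpoint, "example-model", "example_input", [0.1, 0.2, 0.3]
    )
    print(json.dumps(infer(url, payload), indent=2))
```

Keeping the port edit and the request construction in separate helpers lets you check the final URL and JSON body before any request is sent.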

Next step

(Optional) Automating workflows with AI pipelines