Deploying a model on a multi-model server

OpenShift AI multi-model servers can host several models at once. You create a new model server and deploy your model to it.

- A user with admin privileges has enabled the multi-model serving platform on your OpenShift cluster.

-

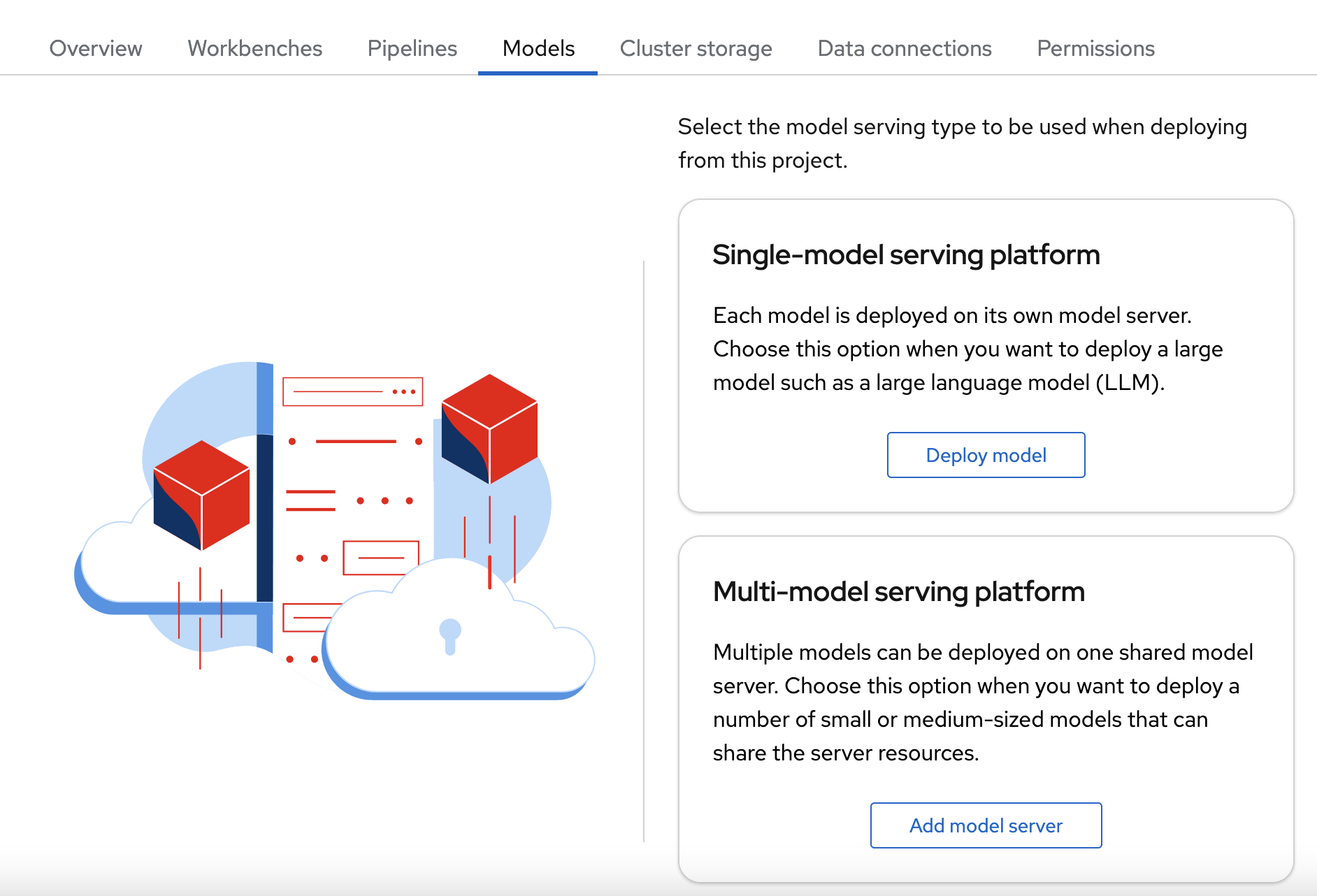

In the OpenShift AI dashboard, navigate to the project details page and click the Models tab.

Depending on how model serving has been configured on your cluster, you might see only one model serving platform option. -

In the Multi-model serving platform tile, click Select multi-model.

-

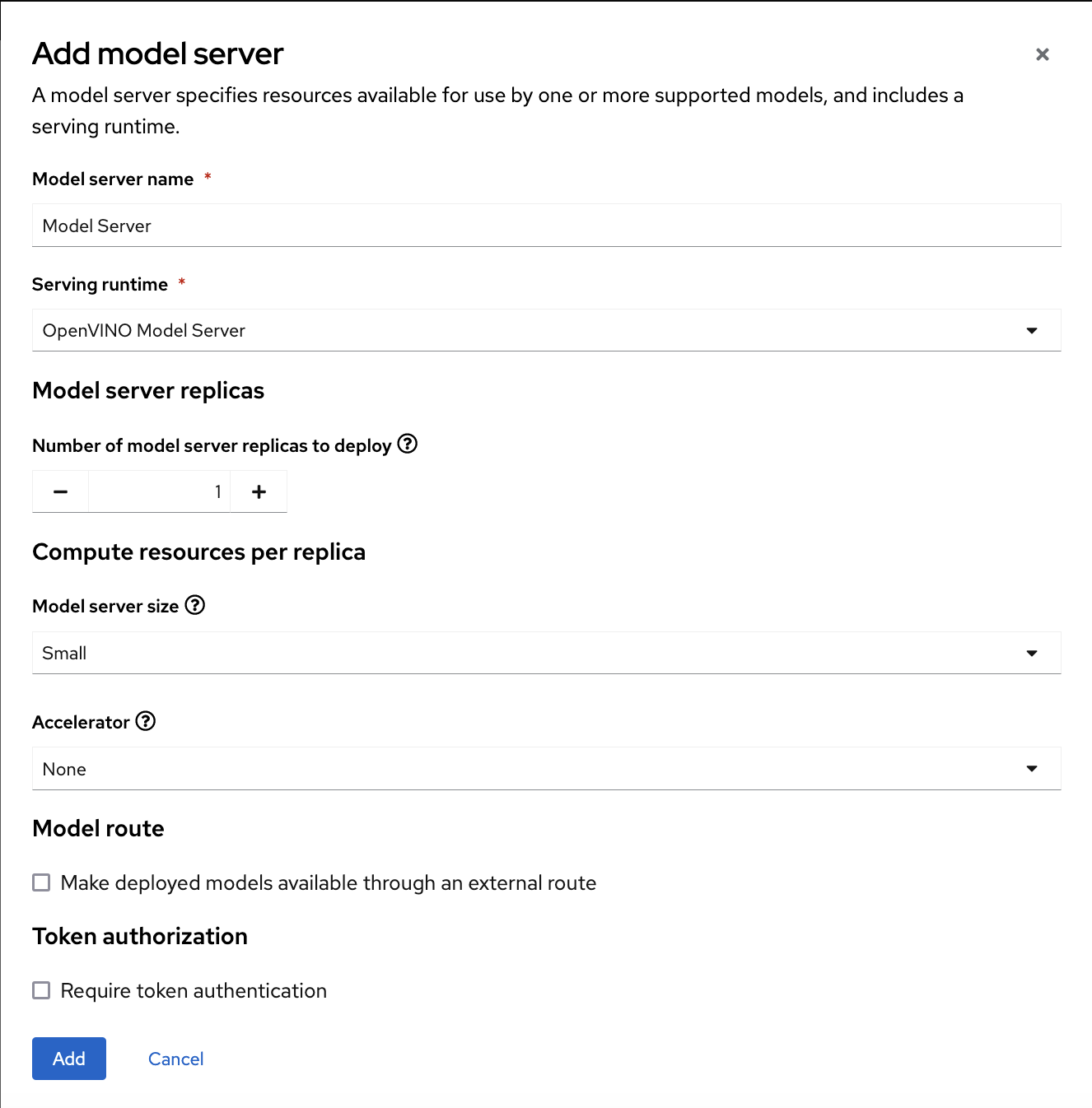

In the form, specify the following values:

-

For Model server name, type a name, for example

Model Server. -

For Serving runtime, select

OpenVINO Model Server. -

Leave the other fields with the default settings.

-

-

Click Add.

-

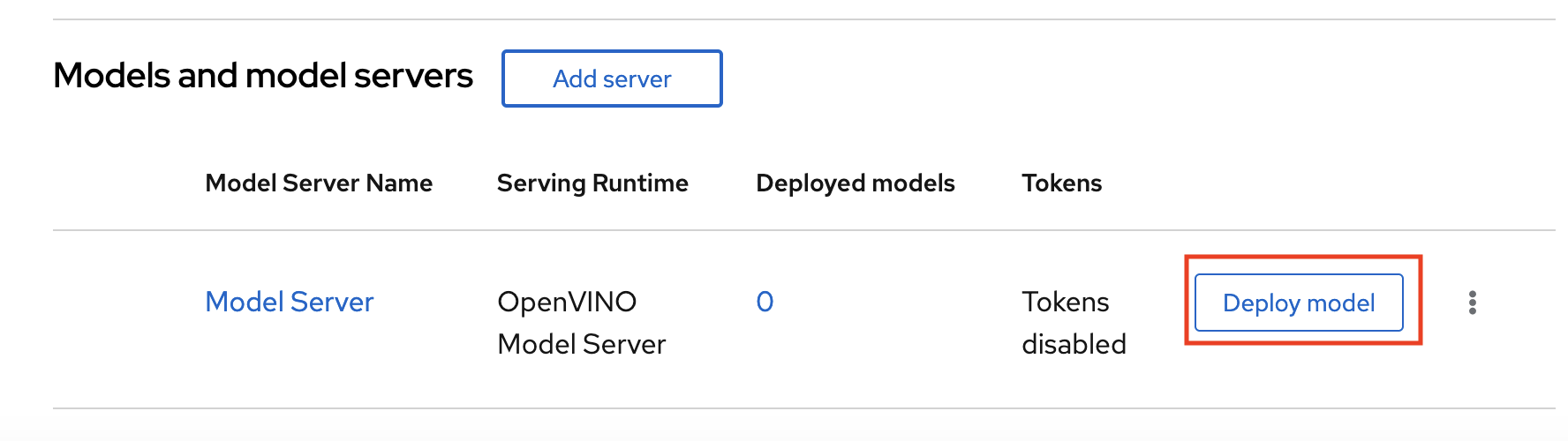

In the Models and model servers list, next to the new model server, click Deploy model.

-

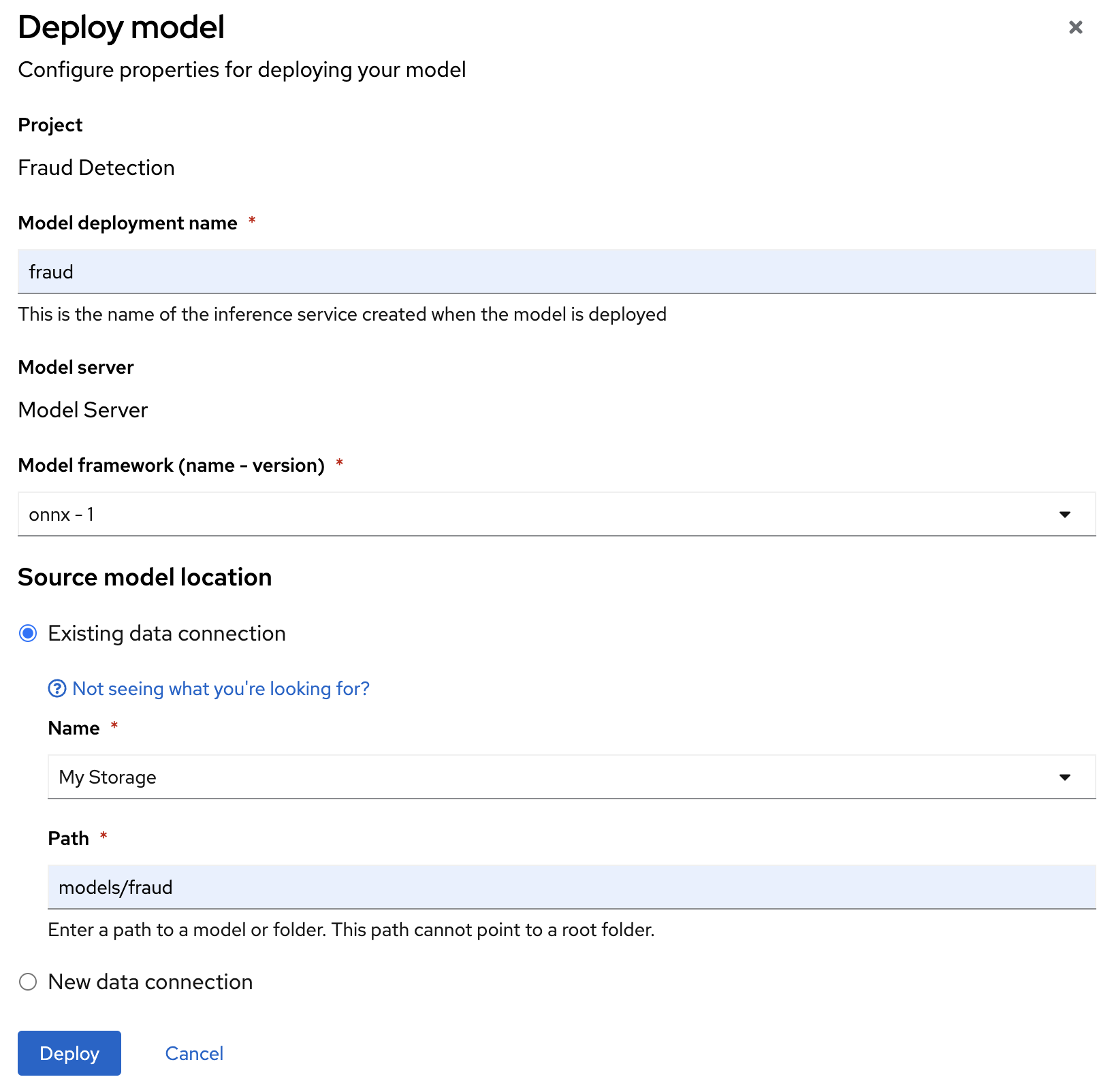

In the form, specify the following values:

-

For Model deployment name, type

fraud. -

For Model framework (name - version), select

onnx-1. -

For Existing connection, select

My Storage. -

Type the path that leads to the version folder that has your model file:

models/fraud -

Leave the other fields with the default settings.

-

-

Click Deploy.
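To see how the form values fit together, it can help to picture the kind of resource a deployment like this might correspond to. The following is a minimal sketch, not the dashboard's actual output: it assumes the deployment is represented as a KServe `InferenceService` in ModelMesh mode. The function `build_inference_service`, the project name `my-project`, and the resource names `model-server` and `my-storage` are hypothetical; only `fraud`, `onnx-1`, and `models/fraud` come from the steps above.

```python
import json


def build_inference_service(name, namespace, runtime, connection_secret, path, framework):
    """Return an InferenceService-style manifest as a plain dict.

    `framework` uses the dashboard's "name - version" form, for example "onnx-1".
    """
    # Split "onnx-1" into the format name and its version at the last hyphen.
    fmt_name, sep, fmt_version = framework.rpartition("-")
    if not sep:
        raise ValueError(f"expected '<name>-<version>', got {framework!r}")
    return {
        "apiVersion": "serving.kserve.io/v1beta1",
        "kind": "InferenceService",
        "metadata": {
            "name": name,
            "namespace": namespace,
            # Assumption: multi-model serving is selected with this annotation.
            "annotations": {"serving.kserve.io/deploymentMode": "ModelMesh"},
        },
        "spec": {
            "predictor": {
                "model": {
                    "modelFormat": {"name": fmt_name, "version": fmt_version},
                    "runtime": runtime,
                    # Assumption: the connection maps to a storage key plus a path.
                    "storage": {"key": connection_secret, "path": path},
                }
            }
        },
    }


manifest = build_inference_service(
    name="fraud",
    namespace="my-project",          # hypothetical project name
    runtime="model-server",          # hypothetical resource name for the model server
    connection_secret="my-storage",  # hypothetical secret name for the connection
    path="models/fraud",
    framework="onnx-1",
)
print(json.dumps(manifest, indent=2))
```

The sketch only builds and prints a dictionary; it does not contact a cluster. Compare it against the resource that your own cluster actually creates before relying on any field name.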

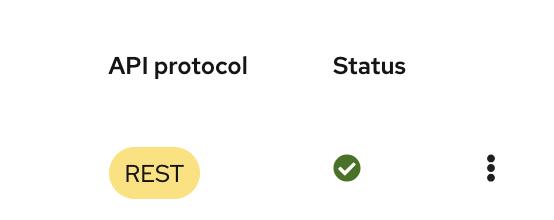

Notice the loading symbol under the Status section. The symbol changes to a checkmark when the deployment completes successfully.
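If you prefer to check readiness from a script rather than the dashboard, the same idea can be expressed as a small helper. This is a sketch under an assumption: that the deployed model is exposed as a resource whose `status.conditions` list contains a condition of type `Ready`, as KServe resources commonly do. The function `is_ready` and the sample dictionaries are hypothetical and stand in for JSON retrieved from your cluster.

```python
def is_ready(inference_service):
    """Return True if the resource reports a Ready condition with status "True"."""
    conditions = inference_service.get("status", {}).get("conditions", [])
    return any(
        c.get("type") == "Ready" and c.get("status") == "True"
        for c in conditions
    )


# Hypothetical snapshots of the same resource while loading and after loading.
loading = {"status": {"conditions": [{"type": "Ready", "status": "False"}]}}
loaded = {"status": {"conditions": [{"type": "Ready", "status": "True"}]}}

print(is_ready(loading))  # False
print(is_ready(loaded))   # True
print(is_ready({}))       # False: no status reported yet
```

A resource with no status at all is treated as not ready, which roughly matches the loading symbol shown before the checkmark appears.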