Distributing training jobs with the Training Operator

The Kubeflow Training Operator is a Kubernetes operator for scalable distributed training of machine learning (ML) models built with ML frameworks such as PyTorch.

NOTE: Distributed training in OpenShift AI uses the Red Hat build of Kueue for admission and scheduling. Before you run a distributed training example in this tutorial, complete the setup tasks in Setting up Kueue resources.

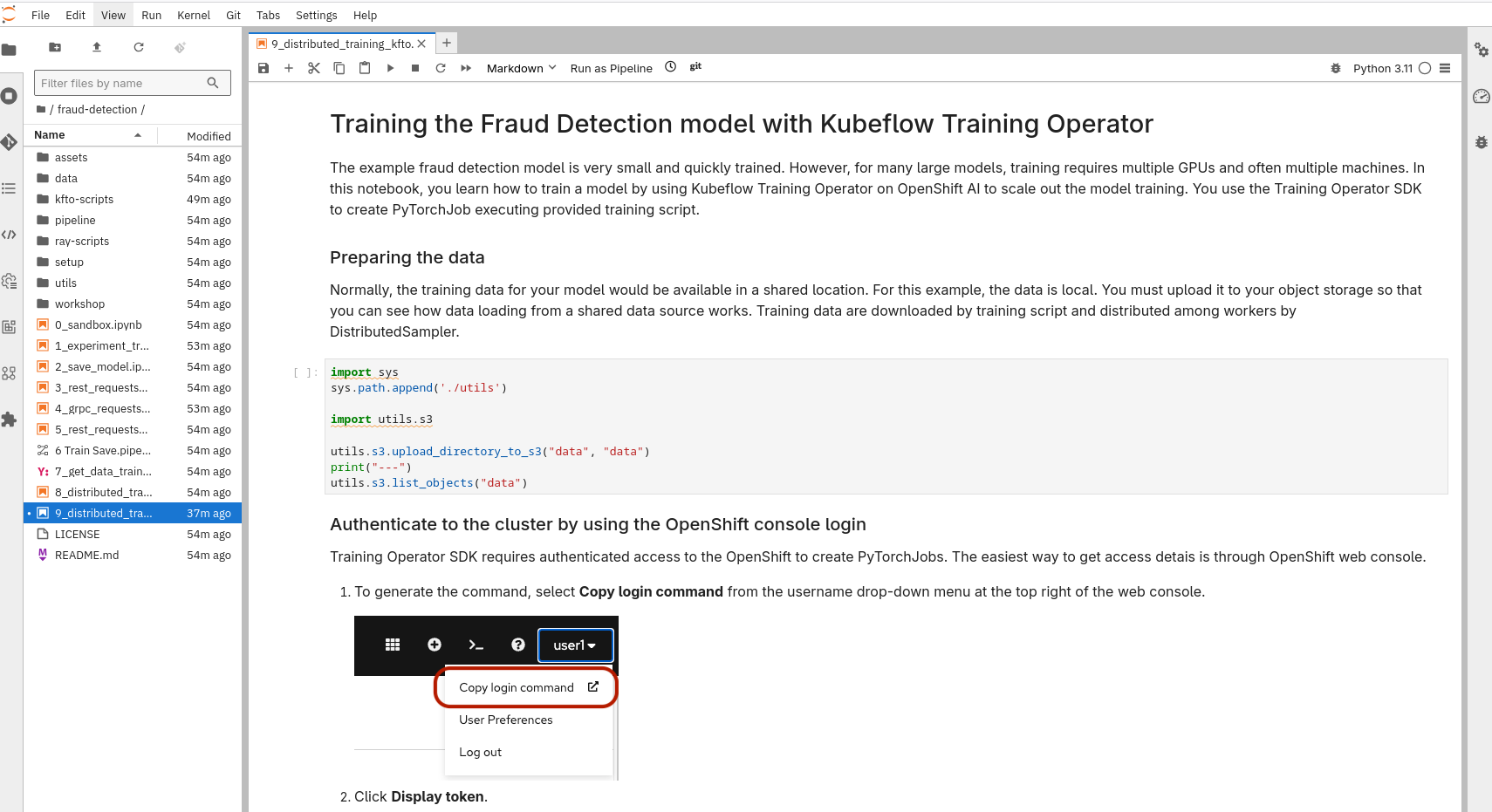

In your notebook environment, open the 7_distributed_training_kfto.ipynb file and follow the instructions in the notebook. The instructions guide you through setting up authentication, initializing the Training Operator client, and submitting a PyTorchJob.
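The notebook submits the job through the Training Operator client. As a rough picture of what a PyTorchJob looks like, the following minimal sketch builds a `kubeflow.org/v1` PyTorchJob manifest as a plain Python dict, with one `Master` replica and a configurable number of `Worker` replicas. The helper `build_pytorchjob` is hypothetical, and the job name, namespace, container image, script path, and LocalQueue name are placeholders, not values from the tutorial. The `kueue.x-k8s.io/queue-name` label is how a job is pointed at a Kueue LocalQueue for admission.

```python
from __future__ import annotations

import json


def build_pytorchjob(
    name: str,
    namespace: str,
    image: str,
    command: list[str],
    num_workers: int = 1,
    queue_name: str | None = None,
) -> dict:
    """Return a PyTorchJob manifest (apiVersion kubeflow.org/v1) as a dict.

    Hypothetical helper for illustration only.
    """

    def replica_spec(replicas: int) -> dict:
        return {
            "replicas": replicas,
            "restartPolicy": "OnFailure",
            "template": {
                "spec": {
                    "containers": [
                        {
                            # The Training Operator expects the training
                            # container to be named "pytorch".
                            "name": "pytorch",
                            "image": image,
                            "command": command,
                        }
                    ]
                }
            },
        }

    metadata: dict = {"name": name, "namespace": namespace}
    if queue_name:
        # Kueue admits the job through the LocalQueue named in this label.
        metadata["labels"] = {"kueue.x-k8s.io/queue-name": queue_name}

    return {
        "apiVersion": "kubeflow.org/v1",
        "kind": "PyTorchJob",
        "metadata": metadata,
        "spec": {
            "pytorchReplicaSpecs": {
                "Master": replica_spec(1),
                "Worker": replica_spec(num_workers),
            }
        },
    }


# Usage with placeholder values (assumptions, not tutorial values).
job = build_pytorchjob(
    name="pytorch-cpu-demo",
    namespace="my-project",
    image="quay.io/example/pytorch-cpu:latest",
    command=["python", "/workspace/train_pytorch_cpu.py"],
    num_workers=2,
    queue_name="local-queue",
)
print(json.dumps(job, indent=2))
```

To submit a dict like this without the Training Operator client, one option is the Kubernetes Python client's `CustomObjectsApi.create_namespaced_custom_object` with `group="kubeflow.org"`, `version="v1"`, and `plural="pytorchjobs"`. The notebook's client-based approach is the path this tutorial follows.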

You can also view the complete Python code in the kfto-scripts/train_pytorch_cpu.py file.
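The Training Operator sets the `MASTER_ADDR`, `MASTER_PORT`, `WORLD_SIZE`, and `RANK` environment variables in each PyTorchJob pod, and a distributed PyTorch script reads them to join the process group. The sketch below is not the contents of `train_pytorch_cpu.py`. It assumes a CPU script uses the `gloo` backend, and the names `DistEnv`, `read_dist_env`, and `init_distributed` are hypothetical. PyTorch is imported only inside `init_distributed`, so the environment parsing runs without it.

```python
import os
from dataclasses import dataclass
from typing import Mapping, Optional


@dataclass
class DistEnv:
    """Distributed settings read from the pod environment (hypothetical type)."""

    master_addr: str
    master_port: int
    world_size: int
    rank: int

    @property
    def is_master(self) -> bool:
        # Rank 0 runs in the Master replica.
        return self.rank == 0


def read_dist_env(env: Optional[Mapping[str, str]] = None) -> DistEnv:
    """Parse the variables the Training Operator injects.

    Defaults allow a single-process local run when the variables are unset.
    """
    env = os.environ if env is None else env
    return DistEnv(
        master_addr=env.get("MASTER_ADDR", "localhost"),
        master_port=int(env.get("MASTER_PORT", "29500")),
        world_size=int(env.get("WORLD_SIZE", "1")),
        rank=int(env.get("RANK", "0")),
    )


def init_distributed(dist_env: DistEnv) -> None:
    """Join the process group using the CPU-capable gloo backend."""
    import torch.distributed as dist  # lazy import: requires PyTorch

    dist.init_process_group(
        backend="gloo",
        init_method=f"tcp://{dist_env.master_addr}:{dist_env.master_port}",
        rank=dist_env.rank,
        world_size=dist_env.world_size,
    )


if __name__ == "__main__":
    dist_env = read_dist_env()
    print(
        f"rank {dist_env.rank} of {dist_env.world_size}, "
        f"master at {dist_env.master_addr}:{dist_env.master_port}"
    )
    # A real training script would call init_distributed(dist_env) here,
    # then wrap its model in torch.nn.parallel.DistributedDataParallel.
```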

For more information about PyTorchJob training with the Training Operator, see the Training Operator PyTorchJob guide.